Have you ever noticed some strange traffic patterns on your website's analytics? Pages being crawled rapidly or hit counts skyrocketing in a matter of minutes? Chances are, that was more than just an influx of eager human visitors.

Automated programs, known as bots, constantly scour the internet. Estimates suggest that these bots accounted for anywhere from 47% to 73% of all internet traffic in 2023. Some of these bots, like search engine crawlers, are benign, but others can seriously threaten your site's security and performance.

Dealing with malicious bot traffic can be an everyday occurrence for online businesses, whether it's scraper bots stealing your content or credential stuffing attacks trying to break into user accounts. Failing to detect and block malicious bots can slow down your site, make resources unavailable to legitimate users, and put your business at risk.

Thankfully, there are effective techniques to identify and stop bad bot traffic. This article will cover how to spot the telltale signs of bot traffic, why it can be a problem, and proven methods to stop nefarious automated visitors.

What is bot detection?

Bot detection is the process of determining whether activity on a website comes from human users or from automated software programs, known as bots. Bots are coded to perform specific tasks, such as crawling websites, and can operate at speeds far exceeding human capabilities.

At its core, bot detection involves analyzing various attributes of website requests and user sessions to determine if the visitor is a bot. Detection typically requires monitoring dozens of potential bot signals like browser details, mouse movements, scrolling behavior, HTTP headers, and request rates.

By establishing a baseline for human user activity, advanced bot detection solutions can identify anomalies that suggest an automated bot is accessing your site. Machine learning models often evaluate these signals and score each website visitor as likely human or bot.

Organizations can then use this bot detection information to block malicious bots, challenge suspected bots for human verification, or better monitor and understand their traffic.

Why are bots used?

There are many different reasons for using bots to access websites. Search engines like Google, Bing, and Yahoo employ crawlers to constantly scan the web, indexing content to provide data for their search platforms. Price comparison sites could use bots to monitor pricing across multiple websites to find the best deal or notify users of price drops.

However, fraudsters also use bots for malicious purposes, such as launching credential stuffing attacks that rapidly use stolen login credentials to gain unauthorized access to user accounts. Spam distribution is another nefarious use case, with bots scouring sites looking for ways to post junk comments, links, and other unwanted content.

Why is bot detection important?

While some bot usage is legitimate, other uses are malicious or violate terms of service. The potential consequences of not identifying and managing bot traffic on your site can be severe and far-reaching.

Regardless of intent, bots can overwhelm your servers, skew your analytics, scrape proprietary data, and commit many types of fraud if not detected and appropriately managed. Whether you want to protect your data, ensure accurate analytics, prevent fraud, or maintain optimal performance, having insight into your site's automated traffic is essential.

Some of the key reasons to implement effective bot detection measures include:

Protection Against Bot Attacks

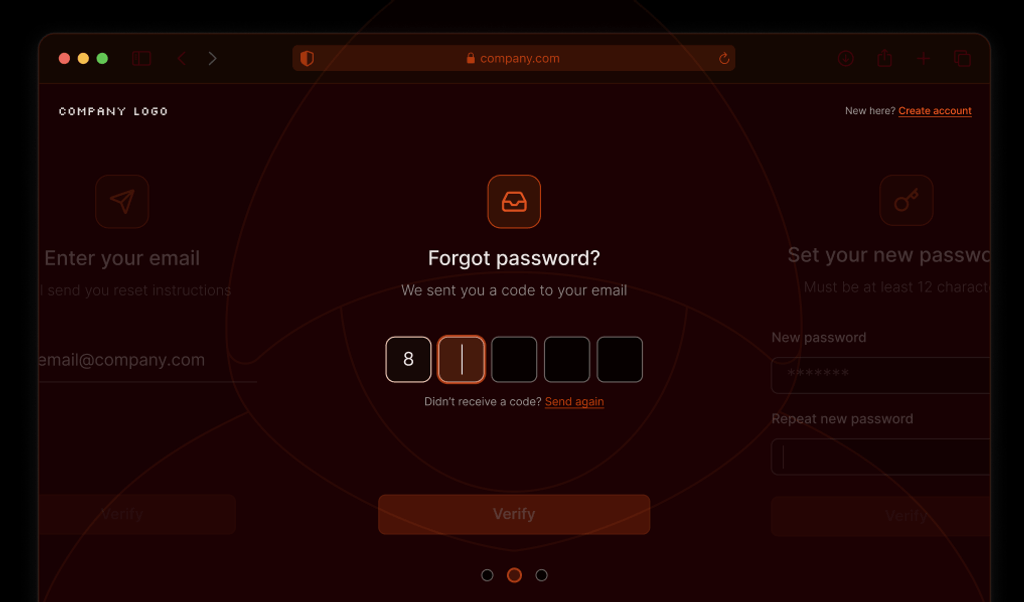

Attackers often use bots to launch attacks on sites, such as credential stuffing attacks that attempt to breach user accounts and steal data, or even distributed denial-of-service (DDoS) attacks that try to take your website down. Monitoring malicious bot traffic is a critical defense against such attacks.

Fraud Prevention

Bots are a lucrative tool for committing fraud, enabling fraudsters to bypass protection measures and manipulate transactions at scale. Online payment fraud, account creation fraud, and coupon and signup promo abuse are just some examples. Bot detection helps unmask these automated threats to prevent lasting damage and financial losses for your business.

Content Protection

If you run a content or media website with valuable data, effective bot detection is essential for keeping your proprietary data from being scraped and shared elsewhere. By identifying bots that try to scrape and copy your content, you can block that activity and protect your intellectual property.

User Experience and Performance

At high volumes, malicious bot traffic can severely degrade website performance by overloading servers with a flood of rapid requests. This results in slow load times, errors, and a frustrating experience for legitimate human visitors. Detecting and blocking bad bots prevents negative impacts on user experience and site operation.

Compliance

In highly regulated industries like finance, healthcare, and education, there can be strict data privacy and security compliance requirements around user data and system activity monitoring. Maintaining visibility into your traffic sources, including differentiating between humans and bots, is essential for auditing access and proving compliance.

Analytics Accuracy

Large volumes of unidentified bot traffic can skew your website's analytics data, distorting metrics like page views, sessions, conversion rates, and more. This makes it hard to make informed decisions based on how real human users interact with your site. Accurate bot detection and filtering give you a realistic picture of your website's performance. You may even find new insights into how your website is accessed, such as data that bots request often enough that it could be worth offering through a new API.

Signs indicating you may have bot traffic

While bot detection tools provide detailed information on each visitor, there are some telltale signs and anomalies that may indicate automated programs are accessing your site:

- Spike in Traffic: A sudden surge of traffic, especially from cloud hosting providers like AWS or data center IP ranges, can point to a botnet (a group of bots) visiting your site. These types of traffic spikes are usually unnatural for human visitor patterns.

- High Bounce Rates & Short Sessions: If you see many sessions with a single page view and almost no time spent on your site, it could suggest crawler bots rapidly hitting your pages without engaging with the content, as humans would.

- Strange Conversion Patterns: Are you seeing a lot of successful email newsletter signups or purchases but little to no matching engagement with your site? These conversion patterns could indicate bots programmatically submitting forms or placing bogus orders.

- Impossible Analytics: Are you seeing incredibly unusual metrics like billions of page views or sessions from browser versions that don't exist yet? These extreme, irrational patterns can signify sophisticated bots attempting to appear like real users and traffic.

- Scraped Data Replicas: Some bots copy the entire source code of your web pages. If you find instances of your site's code or content appearing elsewhere verbatim, that's a red flag for content scraping bot activity.

Effective techniques for bot detection

Simply looking for red flags like those above is insufficient to detect and handle bot traffic reliably. Fraudsters constantly evolve their bots to mimic human behaviors and evade basic detection methods.

The most robust bot detection combines techniques that look at technical characteristics and behavioral data. To stay ahead of sophisticated bots, website owners need to use advanced, multi-layered bot detection techniques such as:

Interaction-Based Verification

Challenge-Based Validation

Use challenge-based validation to ask suspected bots to prove they are human. You can present them with CAPTCHAs, human validation questions, browser rendering tests, audio/visual challenges, and other tests that are harder for bots to pass. Keep in mind that some of these verification methods also add friction for real users.

Honeypots

Set traps that people browsing normally never see but that bots are likely to interact with. For example, a form or form field that is hidden from view but still present in the page's HTML might attract bot submissions. These submissions can then flag automated visitors for further review or immediate blocking.
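A honeypot check can be as small as the following server-side sketch. It assumes the form contains a hidden text input with the hypothetical name `website` and treats any non-empty value in that field as a likely bot submission.

```javascript
// Hypothetical honeypot field name. The input is present in the form's HTML but
// hidden from people, for example:
// <input type="text" name="website" tabindex="-1" autocomplete="off" style="display:none">
const HONEYPOT_FIELD = "website";

// Returns true when a parsed form body fills in the honeypot field.
// People never see the field, so any non-empty value is a strong bot signal.
function isHoneypotTriggered(formBody) {
  const value = formBody[HONEYPOT_FIELD];
  return typeof value === "string" && value.trim() !== "";
}

console.log(isHoneypotTriggered({ email: "ana@example.com", website: "" })); // false
console.log(isHoneypotTriggered({ email: "x@spam.test", website: "http://spam.test" })); // true
```

The `tabindex="-1"` and `autocomplete="off"` attributes help keep keyboard users and browser autofill from filling the field by accident.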

Behavioral Analysis

Single Page Interaction

Examine user behavior on individual pages by monitoring mouse movements, scrolling cadences, and engagement with page elements. Look for variances typical of human interaction, like pausing before clicking, uneven scroll speeds, or varying engagement levels with different page areas. Bots often exhibit overly consistent behavior across these activities instead of the natural randomness of human activity.
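One way to quantify "overly consistent" behavior is to measure how much the gaps between a visitor's interaction events vary. The sketch below assumes your client script records event timestamps in milliseconds (for example from `mousemove`, `scroll`, or `click` listeners) and computes the coefficient of variation of those gaps. The 0.1 threshold is a hypothetical starting point, not a calibrated value.

```javascript
// Coefficient of variation (standard deviation divided by mean) of the gaps
// between consecutive event timestamps, given in milliseconds.
// Returns null when there are too few events to judge.
function intervalVariation(timestamps) {
  if (timestamps.length < 3) return null;
  const gaps = [];
  for (let i = 1; i < timestamps.length; i++) {
    gaps.push(timestamps[i] - timestamps[i - 1]);
  }
  const mean = gaps.reduce((sum, g) => sum + g, 0) / gaps.length;
  if (mean === 0) return 0; // All events at the same instant: perfectly regular.
  const variance = gaps.reduce((sum, g) => sum + (g - mean) ** 2, 0) / gaps.length;
  return Math.sqrt(variance) / mean;
}

// Hypothetical threshold: below this, event timing looks machine-regular.
const MIN_HUMAN_VARIATION = 0.1;

function looksMechanical(timestamps) {
  const cv = intervalVariation(timestamps);
  return cv !== null && cv < MIN_HUMAN_VARIATION;
}

console.log(looksMechanical([0, 100, 200, 300, 400])); // true: identical 100 ms gaps
console.log(looksMechanical([0, 130, 410, 480, 900, 950])); // false: irregular gaps
```

A bot can defeat this single check by adding random jitter, so it works best as one input among many signals.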

Navigation and Dwell Time

Analyze how users move between pages and the time spent on each page. Human users generally show variability in their navigation patterns, including the sequence of pages visited and the time spent on each, reflecting genuine interest or searching for information. Bots tend to access numerous pages in quick succession without variations in timing.
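The "quick succession" pattern can be checked per session with a few lines of code. This sketch assumes you already group page views into sessions as `{ path, at }` objects with millisecond timestamps; both thresholds are hypothetical and should be tuned against your own human traffic.

```javascript
// Average time between consecutive page views in one session, in milliseconds.
// `views` is an array of { path, at } objects sorted by `at` (a ms timestamp).
function averageDwellMs(views) {
  if (views.length < 2) return Infinity; // One view gives no dwell information.
  // The gaps between consecutive views sum to (last - first).
  return (views[views.length - 1].at - views[0].at) / (views.length - 1);
}

// Hypothetical thresholds; tune them against your own human traffic baseline.
const MIN_PAGES = 10; // Only judge sessions with enough page views.
const MAX_AVG_DWELL_MS = 2000; // Hypothetical cutoff for average time per page.

function isRapidCrawl(views) {
  return views.length >= MIN_PAGES && averageDwellMs(views) < MAX_AVG_DWELL_MS;
}

// A session that requests 20 product pages 400 ms apart.
const botSession = Array.from({ length: 20 }, (_, i) => ({ path: `/product/${i}`, at: i * 400 }));
console.log(isRapidCrawl(botSession)); // true
```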

Form Completion Behavior

Look at how visitors complete forms. Unlike humans, bots can fill out multiple inputs instantly and might use repetitive or nonsensical data or predictable sequences of characters. Look for telltale signs that a human is filling in the form, like making typos and fixing them, or skipping optional fields that a bot might not recognize as optional.
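Two of these signals, filling too fast and repeating the same value, can be checked on the server. This sketch assumes you record, server-side, when the form was served (for example in the user's session, so the client cannot alter it) and when the submission arrived; `MIN_MS_PER_FIELD` is a hypothetical value.

```javascript
// Hypothetical minimum time a person needs per filled-in field, in ms.
const MIN_MS_PER_FIELD = 800;

// fields: the submitted form values, keyed by field name.
// renderedAt / submittedAt: server-side ms timestamps for when the form was
// served and when the submission arrived.
function formSubmissionFlags(fields, renderedAt, submittedAt) {
  const flags = [];
  const values = Object.values(fields).filter((v) => typeof v === "string" && v !== "");
  // Too little time for the number of fields that were filled in.
  if (submittedAt - renderedAt < values.length * MIN_MS_PER_FIELD) {
    flags.push("filled-too-fast");
  }
  // The same string entered into several different fields.
  if (values.length > 1 && new Set(values).size === 1) {
    flags.push("repeated-values");
  }
  return flags;
}

console.log(formSubmissionFlags({ name: "aaa", email: "aaa", company: "aaa" }, 0, 500));
// [ 'filled-too-fast', 'repeated-values' ]
```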

Attribute Intelligence and Recognition

Machine Learning

Train machine learning models on massive datasets of past human and bot interactions. By analyzing billions of data points on user journeys, mouse movements, cognitive processing times, and browser characteristics, these models can learn to identify behaviors indicative of bots versus real users in real time. The models can be retrained on new data and traffic sources as bots evolve their techniques.
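To make the training and scoring steps concrete, here is a toy sketch using logistic regression, a simple model chosen here for illustration, trained with gradient descent on a handful of labeled examples. The three features and the data are hypothetical and pre-scaled to the 0 to 1 range; production systems train far richer models on vastly more data.

```javascript
// Each example is [features, label], where label 1 = bot and 0 = human.
// Hypothetical features, scaled to 0..1: [requestRate, timingRegularity, headlessSignal]
function sigmoid(z) {
  return 1 / (1 + Math.exp(-z));
}

// Trains logistic regression with stochastic gradient descent on log loss and
// returns a scoring function: the estimated probability that a visitor is a bot.
function trainLogistic(examples, { epochs = 2000, learningRate = 0.5 } = {}) {
  const dims = examples[0][0].length;
  const weights = new Array(dims).fill(0);
  let bias = 0;
  const predict = (x) => sigmoid(x.reduce((s, xi, i) => s + xi * weights[i], bias));
  for (let epoch = 0; epoch < epochs; epoch++) {
    for (const [x, y] of examples) {
      const error = predict(x) - y; // Gradient of log loss with respect to z.
      for (let i = 0; i < dims; i++) weights[i] -= learningRate * error * x[i];
      bias -= learningRate * error;
    }
  }
  return predict;
}

const score = trainLogistic([
  [[0.9, 0.95, 1], 1],
  [[0.8, 0.9, 0], 1],
  [[0.95, 0.7, 1], 1],
  [[0.1, 0.2, 0], 0],
  [[0.2, 0.1, 0], 0],
  [[0.05, 0.3, 0], 0],
]);

console.log(score([0.85, 0.9, 1]) > 0.5); // true: scored as a likely bot
console.log(score([0.1, 0.15, 0]) > 0.5); // false: scored as a likely human
```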

Browser and Device Analysis

Look at the characteristics of the client browser and the device hardware and software configuration to create normal baselines and unmask bots. For browsers, sites can analyze how the client renders pages, executes JavaScript, processes audiovisual elements, and handles other interactive tasks to spot deviations from natural browser behavior. On the device side, sites can evaluate attributes like screen dimensions, OS, language, CPU/memory usage, graphics rendering capabilities, and more. Significant deviations from known baselines represent likely bots masquerading as legitimate devices and browsers.
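A few simple attribute consistency checks are sketched below. It assumes a hypothetical `signals` object that your client script fills from standard browser APIs and sends to the server; real detection, as described above, compares many more attributes against learned baselines.

```javascript
// `signals` is a hypothetical object your client script builds from standard
// browser APIs: navigator.userAgent, navigator.platform (deprecated but still
// widely available), navigator.webdriver, navigator.languages, screen.width,
// and screen.height. Returns a list of inconsistencies found.
function browserInconsistencies(signals) {
  const issues = [];
  const ua = signals.userAgent || "";
  // navigator.webdriver is true when the browser is under WebDriver-style automation.
  if (signals.webdriver === true) issues.push("webdriver-flag");
  // Headless Chrome has historically advertised "HeadlessChrome" in its user agent.
  if (/HeadlessChrome/.test(ua)) issues.push("headless-user-agent");
  // A user agent claiming Windows should come with a Windows platform value.
  if (/Windows/.test(ua) && !/^Win/.test(signals.platform || "")) {
    issues.push("os-mismatch");
  }
  // Regular browsers normally report at least one preferred language.
  if (Array.isArray(signals.languages) && signals.languages.length === 0) {
    issues.push("no-languages");
  }
  // A zero or missing screen size is unusual for a real device.
  if (!signals.screenWidth || !signals.screenHeight) issues.push("no-screen");
  return issues;
}
```

Each of these checks is easy for a determined bot author to patch individually, which is why dedicated tools combine many signals rather than relying on any single one.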

Access Methods and Patterns

IP Blocklist

Use a bot detection solution that offers regularly updated databases of known bot IPs, data center ranges, malicious proxies, and other address sources associated with bot activity. These lists are not a complete solution, since bot IPs rotate constantly, but integrating them adds another useful signal for identifying bad bots.
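Blocklists usually arrive as CIDR ranges rather than individual addresses. The sketch below shows IPv4 range matching; the two ranges in the hypothetical `BLOCKLIST` are reserved documentation ranges standing in for a real, regularly updated feed, and IPv6 is not handled.

```javascript
// Converts a dotted IPv4 string to an unsigned 32-bit integer, or null if invalid.
function ipv4ToInt(ip) {
  const parts = ip.split(".");
  if (parts.length !== 4) return null;
  let n = 0;
  for (const part of parts) {
    if (!/^\d{1,3}$/.test(part) || Number(part) > 255) return null;
    n = n * 256 + Number(part); // Arithmetic instead of bit shifts avoids sign issues.
  }
  return n;
}

// True if `ip` falls inside the CIDR range, e.g. "203.0.113.0/24".
function inCidr(ip, cidr) {
  const [base, bitsText] = cidr.split("/");
  const bits = Number(bitsText);
  const ipInt = ipv4ToInt(ip);
  const baseInt = ipv4ToInt(base);
  if (ipInt === null || baseInt === null || !(bits >= 0 && bits <= 32)) return false;
  // Two addresses share a prefix of `bits` bits if they fall in the same block.
  const blockSize = 2 ** (32 - bits);
  return Math.floor(ipInt / blockSize) === Math.floor(baseInt / blockSize);
}

// Hypothetical blocklist; in practice it comes from a regularly updated feed.
const BLOCKLIST = ["203.0.113.0/24", "198.51.100.0/25"];

function isBlocklisted(ip, blocklist = BLOCKLIST) {
  return blocklist.some((cidr) => inCidr(ip, cidr));
}
```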

Accessing Suspicious URLs

Monitor for unusual access patterns, such as repeated attempts to discover hidden or unprotected login pages, which can reveal bots trying to exploit website vulnerabilities. This probing is usually systematic, more persistent than typical user behavior, and follows predictable URL patterns.
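A minimal per-IP counter for this kind of probing might look like the sketch below. The probe patterns, the 60-second window, and the limit of five suspicious hits are all hypothetical, and the in-memory `Map` assumes a single server process.

```javascript
// Hypothetical patterns for paths commonly probed by vulnerability scanners.
const PROBE_PATTERNS = [/\/wp-login\.php$/, /\/\.env$/, /\/admin(\/|$)/, /\/phpmyadmin/i];

// Hypothetical limits: more than 5 probe hits or 404s per IP within 60 seconds.
const WINDOW_MS = 60_000;
const MAX_SUSPICIOUS_HITS = 5;

const hitsByIp = new Map(); // ip -> timestamps (ms) of recent suspicious requests

// Call once per request; returns true when the IP crosses the threshold.
function recordRequest(ip, path, status, now = Date.now()) {
  const suspicious = status === 404 || PROBE_PATTERNS.some((re) => re.test(path));
  // Keep only hits that are still inside the time window.
  const recent = (hitsByIp.get(ip) || []).filter((t) => now - t < WINDOW_MS);
  if (suspicious) recent.push(now);
  if (recent.length === 0) hitsByIp.delete(ip); // Avoid keeping idle entries.
  else hitsByIp.set(ip, recent);
  return recent.length > MAX_SUSPICIOUS_HITS;
}
```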

Detecting bot traffic with Fingerprint

While the techniques outlined above are highly effective at detecting bots, building and maintaining these capabilities in-house can be impractical for many companies.

Training effective machine learning models requires massive computing resources and global data far beyond what a single website can access. Accurately analyzing behavior and devices is complex, IP threat databases quickly become outdated, and CAPTCHAs degrade the user experience for actual humans.

Fingerprint is a device intelligence platform that provides highly accurate browser and device identification. Our bot detection signal collects large amounts of browser data that bots leak (errors, network overrides, browser attribute inconsistencies, API changes, and more) to reliably distinguish genuine users from headless browsers, automation tools, and more.

We also provide a suite of Smart Signals for detecting potentially suspicious behaviors like browser tampering, VPN, and virtual machine use to help companies develop strategies to protect their websites from fraudsters.

Using our bot detection signal, companies can quickly determine whether a visitor is a malicious bot and take appropriate action, such as blocking their IP, withholding content, or asking for human verification.

The following tutorial will go through an example of how to detect a bad bot using Fingerprint Pro.

Making an identification request

The first step is to ask the Fingerprint API to identify the visitor when they visit the page. For bot detection, we suggest making this request when a visitor accesses sensitive or valuable data. For other fraud prevention scenarios, you may want to make the identification request when a visitor takes a specific action, like making a purchase or creating an account.

To begin, add the Fingerprint Pro JavaScript agent to your page. Alternatively, you can use one of our front-end libraries if your front end uses popular frameworks like React.js or Svelte. Request the visitor identification when the page loads and send the requestId to your application server.

// Initialize the agent.
// The top-level await below requires a module script (<script type="module">)
// or wrapping the code in an async function.
const fpPromise = import("https://fpjscdn.net/v3/PUBLIC_API_KEY").then(
  (FingerprintJS) =>
    FingerprintJS.load({
      endpoint: "https://metrics.yourdomain.com", // Optional
    })
);

// Collect browser signals and request visitor identification
// from the Fingerprint API. The response contains a requestId.
const { requestId } = await (await fpPromise).get();

// Send the requestId to your back end.
const response = await fetch("/api/bot-detection", {
  method: "POST",
  body: JSON.stringify({ requestId }),
  headers: {
    "Content-Type": "application/json",
    Accept: "application/json",
  },
});

For production deployments, we recommend routing requests to Fingerprint's APIs through your domain using the optional endpoint parameter. This routing prevents ad blockers from disrupting identification requests and improves accuracy. We offer many ways to do this, which you can learn more about in our guide on protecting your JavaScript agent from ad blockers.

Note: Fingerprint actively collects signals from a browser to detect bots and is best suited to protect data endpoints accessible from your website, as demonstrated in this article. It is not designed to protect server-rendered or static content sent to the browser on the initial page load because browser signals are unavailable during server-side rendering.

Validating the visitor identifier

Using the Fingerprint Server API, you should perform the following steps in your back end. You can also use one of our SDKs if your back end uses Node.js or other popular server-side frameworks or languages.

To access the Server API, you must use your Secret API Key. You can obtain yours from the Fingerprint dashboard at Dashboard > App Settings > API Keys. Start a free trial if you do not currently have an account.
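The back-end code in this section calls three helpers, `reportSuspiciousActivity`, `getErrorResponse`, and `getSuccessResponse`, whose implementations are up to your application. Minimal hypothetical versions for an Express app might look like this; the status codes and response shapes are assumptions to adapt.

```javascript
// Hypothetical helper implementations for the Express handler in this tutorial.
// Adapt the logging, status codes, and response shapes to your application.

// Records details about a suspicious request for later review.
function reportSuspiciousActivity(req) {
  console.warn("Suspicious request", {
    ip: req.ip,
    path: req.originalUrl,
    requestId: req.body?.requestId,
  });
}

// Sends a 403 Forbidden response with an explanatory message.
function getErrorResponse(res, message) {
  return res.status(403).json({ severity: "error", message });
}

// Sends a 200 OK response for requests that passed every check.
function getSuccessResponse(res) {
  return res.status(200).json({ severity: "success", message: "Request allowed." });
}
```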

You should validate the identification information before determining whether the visitor is a bot or a human. Check that the requestId you received is legitimate by querying the Server API /events endpoint, which returns additional data for an identification request. First, get the data from the server.

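// Assumes Node.js 18+ (global fetch) and an Express app with JSON body parsing,
// e.g. app.use(express.json()). reportSuspiciousActivity, getErrorResponse, and
// getSuccessResponse are application-specific helpers not shown in this snippet.
app.post("/api/bot-detection", async (req, res) => {
  const { requestId } = req.body;
  // Encode the client-supplied ID so it cannot alter the URL path.
  const fpServerEventUrl = `https://api.fpjs.io/events/${encodeURIComponent(requestId)}`;
  const requestOptions = {
    method: "GET",
    headers: {
      // Your Secret API Key. Keep it on the server; never expose it to the browser.
      "Auth-API-Key": "SECRET_API_KEY",
    },
  };

  // Get the detailed visitor data from the Server API.
  const fpServerEventResponse = await fetch(fpServerEventUrl, requestOptions);

The Server API response must contain information about this specific identification request. If the request for this information fails or doesn't include the visitor information, the request might have been tampered with, and you shouldn't trust this identification attempt.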

  // If there's something wrong with the provided data,
  // the Server API will return a non-2xx response.
  if (!fpServerEventResponse.ok) {
    // Handle the error internally and/or flag the new user for suspicious activity.
    reportSuspiciousActivity(req);
    return getErrorResponse(
      res,
      "Request identifier not found, potential spoofing detected."
    );
  }

  // Get the visitor data from the response.
  const requestData = await fpServerEventResponse.json();
  const visitorData = requestData?.products?.identification?.data;

  // The returned data must have the expected properties.
  if (requestData.error || visitorData?.visitorId == undefined) {
    reportSuspiciousActivity(req);
    return getErrorResponse(res, "Error with visitor identifier.");
  }

A fraudster might reuse a valid request ID, for example one obtained through phishing. Check the freshness of the identification request to prevent replay attacks.

  // The identification event must be a maximum of 3 seconds old.
  // visitorData.time is the ISO 8601 timestamp of the identification request.
  const identifiedAt = new Date(visitorData.time).getTime();
  if (Number.isNaN(identifiedAt) || Date.now() - identifiedAt > 3000) {
    reportSuspiciousActivity(req);
    return getErrorResponse(res, "Old visit detected, potential replay attack.");
  }

We also want to check that the request to your back end comes from the same IP address as the identification request.

  // This is an example of obtaining the client's IP address.
  // Clients can prepend arbitrary values to X-Forwarded-For, so use the
  // right-most entry, which was added by your own proxy. This assumes exactly
  // one trusted proxy in front of the app.
  const forwardedFor = req.headers["x-forwarded-for"];
  const clientIp = forwardedFor
    ? forwardedFor.split(",").pop().trim()
    : req.socket.remoteAddress;
  if (clientIp !== visitorData.ip) {
    reportSuspiciousActivity(req);
    return getErrorResponse(
      res,
      "IP mismatch detected, potential spoofing attack."
    );
  }

Next, check if the identification request comes from a known origin and if the request's origin corresponds to the origin provided by the Fingerprint Pro Server API. Adding Request Filtering in the Fingerprint dashboard is also an excellent idea to filter out unwanted API requests.

  const ourOrigins = ["https://yourdomain.com"];
  const visitorDataOrigin = new URL(visitorData.url).origin;

  // Confirm that the request is from a known origin.
  if (
    visitorDataOrigin !== req.headers["origin"] ||
    !ourOrigins.includes(visitorDataOrigin) ||
    !ourOrigins.includes(req.headers["origin"])
  ) {
    reportSuspiciousActivity(req);
    return getErrorResponse(
      res,
      "Origin mismatch detected, potential spoofing attack."
    );
  }

Determining if the visitor is a bot

Once the visitor identifier from the front end is validated, the next step is to check if this visitor is a bot. The botDetection result returned from the Server API tells you if Fingerprint detected a good bot (for example, a search engine crawler), a bad bot (an automated browser), or not a bot at all (aka a human).

{
  "bot": {
    "result": "bad", // or "good" or "notDetected"
    "type": "headlessChrome"
  },
  "userAgent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/110.0.5481.177 Safari/537.36",
  "url": "https://yourdomain.com/search",
  "ip": "61.127.217.15",
  "time": "2024-03-26T16:43:23.241Z",
  "requestId": "1234557403227.AbclEC"
}

You can decide how to respond if the visitor is a malicious bot. This example blocks the bot's access, but other approaches work too. Optionally, you could also update your WAF rules to block the bot's IP address in the future. See our Bot Firewall tutorial for more details.

  // Get the bot detection data from the response.
  const botDetection = requestData?.products?.botd?.data;

  // Determine if the user is a human or a bot.
  if (botDetection?.bot?.result === "bad") {
    reportSuspiciousActivity(req);
    // Optionally: saveAndBlockBotIp(botDetection.ip);
    return getErrorResponse(
      res,
      "Malicious bot detected, bot access is not allowed."
    );
  }

If the visitor passes all the above validation and is either a good bot or a human, continue processing the request as usual and return a successful response.

  // If all the above checks pass, return the page.
  return getSuccessResponse(res);
});

Best practices for implementing bot detection on your website

Fingerprint bot detection is a strong starting point for comprehensively protecting your website from bot attacks. Here are some additional best practices to follow when implementing bot mitigation on your website:

- Prioritize high-risk entry points to maximize bot mitigation. These would be your most critical areas to manage, such as login portals, payment gateways, account signup flows, and proprietary valuable content.

- Integrate multi-layered detection like behavior analysis, fingerprinting, and challenges for defense-in-depth.

- Set up comprehensive logging and reporting for bot traffic so you can analyze attack patterns, fine-tune detection rules, and respond to emerging threats.

- Once bot traffic is detected according to your policies, automate mitigation actions such as rate limiting and IP blocking.
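Rate limiting, one of the mitigation actions above, can be implemented in several ways. A minimal token-bucket sketch follows: each client key (for example an IP address or visitor ID) gets a bucket that refills at a steady rate, and each request spends one token. State is kept in memory, so this assumes a single server process; the capacity and refill rate are placeholders to tune.

```javascript
// Minimal in-memory token bucket limiter keyed by client (IP, visitor ID, etc.).
class TokenBucketLimiter {
  constructor({ capacity, refillPerSecond }) {
    this.capacity = capacity; // Maximum burst size.
    this.refillPerSecond = refillPerSecond; // Sustained requests per second.
    this.buckets = new Map(); // key -> { tokens, updatedAt }
  }

  // Returns true if the request is allowed, false if it should be rate-limited.
  allow(key, now = Date.now()) {
    const bucket = this.buckets.get(key) || { tokens: this.capacity, updatedAt: now };
    // Refill tokens for the time elapsed since the last request, up to capacity.
    const elapsedSeconds = (now - bucket.updatedAt) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSeconds * this.refillPerSecond);
    bucket.updatedAt = now;
    const allowed = bucket.tokens >= 1;
    if (allowed) bucket.tokens -= 1;
    this.buckets.set(key, bucket);
    return allowed;
  }
}

const limiter = new TokenBucketLimiter({ capacity: 3, refillPerSecond: 1 });
console.log([0, 0, 0, 0].map((t) => limiter.allow("203.0.113.7", t))); // [ true, true, true, false ]
```

Running several app instances would require moving the bucket state into a shared store.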

Bot detection is a never-ending challenge: Stay ahead of the curve with Fingerprint

Detecting and stopping malicious bots is a persistent challenge for businesses. Fraudsters constantly develop new techniques to help their bots evade detection, and website owners must keep up.

With Fingerprint, you can tackle this issue head-on. Our bot detection and other Smart Signals allow organizations to identify and neutralize malicious activity effectively. We are constantly researching new detection techniques. Relying on our expertise simplifies your web development and removes the need to continually track the evolving bot detection landscape yourself.

Contact our team to learn how you can take action to protect your digital assets from bots. Or try out our web scraping prevention demo to see Fingerprint in action.

FAQ

Businesses can start by conducting a thorough risk assessment to understand their exposure to bot attacks. They can then integrate bot detection tools into their security infrastructure. These tools typically use machine learning algorithms to identify patterns indicative of bot activity. Regular security audits and updates are also crucial to keep up with evolving bot tactics.

If left unchecked, bot attacks can cause significant harm to a business. They can carry out fraudulent activities, compromise sensitive data, disrupt operations, and even damage the business's reputation. The financial implications can be severe, especially if the bot attack leads to a data breach or other form of cybercrime.

Any industry that heavily relies on digital platforms is at risk and more vulnerable to bot attacks. This includes e-commerce, finance, healthcare, and social media platforms. These sectors often handle large amounts of sensitive data, making them attractive targets for malicious bots.

Moreover, the high volume of web traffic they experience can make it harder to distinguish between legitimate users and bots.